I recently tweeted a screenshot of a GUI I created using WPF and PowerShell that lets engineers choose the disk to install Windows on, intended for use in an SCCM Task Sequence. I was then asked by (none other than!) David Segura to share this with the rest of the community.

In my last post I wrote about how I found a workaround for a snag I hit while using the MahApps.Metro theme. That was almost 10 months ago. That post was meant to be a precursor to introducing this GUI, but I got busy with life and my new job, so the blog took a back seat. I’m glad that David’s reply has spurred me on to write this post and introduce the GUI. (I also have a few more posts lined up, inspired by Gary Blok’s endeavour to break away from MDT and go native ConfigMgr. More on that soon.)

Update 1: I added steps to the Task Sequence so that the GUI appears only if more than one disk is present, as suggested by Marcel Moerings in the comments.

Update 2: I updated the script to exclude USB drives.

Background

SCCM will install the OS on disk 0 by default. In my previous environment, two-disk configurations were very common, so I created this GUI to let engineers choose the disk to install Windows on. It works by leveraging the “OSDDiskIndex” task sequence variable: if you set this variable to your desired disk number, SCCM will install the OS on that disk.
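As a minimal sketch of that mechanism, the function below sets OSDDiskIndex through the Microsoft.SMS.TSEnvironment COM object, the same object the full script uses. The name Set-OSDDiskIndex and the try/catch fallback for running outside a task sequence are illustrative assumptions, not part of the original script.

```powershell
# Hypothetical helper (not part of the original script): set OSDDiskIndex so
# the task sequence targets the given disk number instead of disk 0.
function Set-OSDDiskIndex {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)]
        [int]$DiskNumber
    )

    try {
        # This COM object only exists while a ConfigMgr task sequence is running
        $TSEnv = New-Object -ComObject Microsoft.SMS.TSEnvironment -ErrorAction Stop
    }
    catch {
        Write-Warning "Microsoft.SMS.TSEnvironment is not available; OSDDiskIndex was not set."
        return $false
    }

    # Task sequence variables hold string values
    $TSEnv.Value('OSDDiskIndex') = "$DiskNumber"
    return $true
}

# Usage inside a task sequence: install Windows on disk 1
# Set-OSDDiskIndex -DiskNumber 1
```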

This is what the GUI looks like when run in full OS:

And this is what it looks like when run in a Task Sequence:

Prerequisites

You will need to add the following components to your Boot Image:

- Windows PowerShell (WinPE-PowerShell)
- Windows PowerShell (WinPE-StorageWMI)
- Microsoft .NET (WinPE-NetFx)

How to Implement

Simples :)

- Create a standard package with the contents of the zip file. Do not create a program.

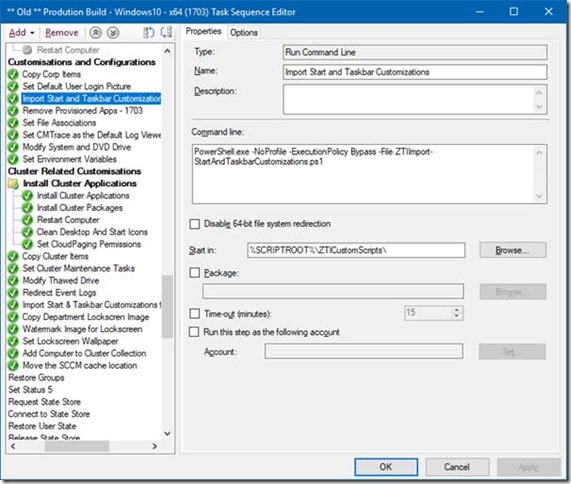

- In your task sequence, add a Group called “Choose Disk” before the partitioning steps.

- Within the group, add a Run Command Line step and name it “Check if there’s more than one Hard Disk”. Enter the following one-liner:

PowerShell.exe -NoProfile -Command "If ((Get-Disk | Where-Object -FilterScript {$_.Bustype -ne 'USB'}).Count -gt 1) {$TSEnv = New-Object -COMObject Microsoft.SMS.TSEnvironment;$TSEnv.Value('MoreThanOneHD') = $true}"

- Add another Run Command Line step and name it “Choose Disk to Install OS”, and choose the package you created. Add the following command line:

%SYSTEMROOT%\System32\WindowsPowerShell\v1.0\powershell.exe -STA -NoProfile -ExecutionPolicy Bypass -File .\ChooseDiskWPF.ps1

- In the Options tab for the “Choose Disk to Install OS” step, click Add Condition > Task Sequence Variable, type “MoreThanOneHD” in the variable field, set the condition to “equals” and the value to “TRUE”.
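The check in the first step packs a filter, a count and a variable assignment into one line. Spread out with comments, the same logic might look like the sketch below. Moving the count into a function that takes disk objects from the pipeline (the hypothetical Test-MoreThanOneDisk) is an assumption made only so the logic can be exercised with mock objects outside WinPE; it is not part of the original solution.

```powershell
# Hypothetical helper: returns $true when more than one non-USB disk is piped in
function Test-MoreThanOneDisk {
    param(
        [Parameter(ValueFromPipeline = $true)]
        [object[]]$Disk
    )
    begin { $count = 0 }
    process {
        foreach ($d in $Disk) {
            # Same filter as the one-liner: ignore USB-attached disks
            if ($d.BusType -ne 'USB') { $count++ }
        }
    }
    end { $count -gt 1 }
}

# In the task sequence this would be fed by Get-Disk:
# if (Get-Disk | Test-MoreThanOneDisk) {
#     $TSEnv = New-Object -ComObject Microsoft.SMS.TSEnvironment
#     $TSEnv.Value('MoreThanOneHD') = $true
# }
```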

Bear in mind that, apart from USB drives, this solution does not exclude removable drives. This is because I always run a set of pre-flight checks as a first step, which weed out any removable drives before this GUI is presented, so I did not duplicate that check here.
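If a pre-flight check like that isn’t part of your task sequence, one option is to widen the filter beyond USB. The sketch below drops any disk whose BusType appears in a configurable exclusion list. Get-CandidateDisk is a hypothetical name, and the default list of USB, SD and MMC is an assumption about which bus types count as removable in your hardware; check the BusType values Get-Disk reports on your own devices before relying on it.

```powershell
# Hypothetical filter: drop disks on bus types treated as removable.
# The default exclusion list is an assumption; adjust it for your hardware.
function Get-CandidateDisk {
    param(
        [Parameter(ValueFromPipeline = $true)]
        [object[]]$Disk,
        [string[]]$ExcludedBusType = @('USB', 'SD', 'MMC')
    )
    process {
        foreach ($d in $Disk) {
            # -notcontains compares strings case-insensitively, like -ne in the one-liner
            if ($ExcludedBusType -notcontains $d.BusType) { $d }
        }
    }
}

# Example in WinPE: list the remaining disks in disk-number order
# Get-Disk | Get-CandidateDisk | Sort-Object -Property Number | Select-Object Number, Model, BusType
```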

The Code

```powershell
Clear-Host

# Assign current script directory to a global variable
$Global:MyScriptDir = [System.IO.Path]::GetDirectoryName($MyInvocation.MyCommand.Definition)

# Load PresentationFramework and the DLLs for the MahApps.Metro theme
[System.Reflection.Assembly]::LoadWithPartialName("PresentationFramework") | Out-Null
[System.Reflection.Assembly]::LoadFrom("$Global:MyScriptDir\assembly\System.Windows.Interactivity.dll") | Out-Null
[System.Reflection.Assembly]::LoadFrom("$Global:MyScriptDir\assembly\MahApps.Metro.dll") | Out-Null

# Temporarily close the TS progress UI (skipped when running in the full OS)
Try {
    $TSProgressUI = New-Object -ComObject Microsoft.SMS.TSProgressUI -ErrorAction Stop
    $TSProgressUI.CloseProgressDialog()
}
Catch {
    Write-Verbose "TS progress UI not available; assuming we are not in a task sequence."
}

# Set the console window title
$Host.UI.RawUI.WindowTitle = "Choose hard disk..."

Function LoadForm {
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory=$True,Position=1)]
        [string]$XamlPath
    )

    # Import the XAML code
    [xml]$Global:xmlWPF = Get-Content -Path $XamlPath

    # Add WPF and Windows Forms assemblies
    Try {
        Add-Type -AssemblyName PresentationCore,PresentationFramework,WindowsBase,System.Windows.Forms
    }
    Catch {
        Throw "Failed to load Windows Presentation Framework assemblies."
    }

    # Create the XAML reader using a new XML node reader
    $Global:xamGUI = [Windows.Markup.XamlReader]::Load((New-Object System.Xml.XmlNodeReader $xmlWPF))

    # Create a global variable (hook) for each named object in the XAML
    $xmlWPF.SelectNodes("//*[@Name]") | ForEach-Object {
        Set-Variable -Name ($_.Name) -Value $xamGUI.FindName($_.Name) -Scope Global
    }
}

Function Get-SelectedDiskInfo {
    # Get the disk whose number matches the entry selected in the ListBox
    $SelectedDisk = Get-Disk | Where-Object { $_.Number -eq $Global:ArrayOfDiskNumbers[$ListBox.SelectedIndex] }

    # Unhide the disk information labels
    $DiskInfoLabel.Visibility = "Visible"
    $DiskNumberLabel.Visibility = "Visible"
    $SizeLabel.Visibility = "Visible"
    $HealthStatusLabel.Visibility = "Visible"
    $PartitionStyleLabel.Visibility = "Visible"

    # Populate the labels with the disk information
    $DiskNumber.Content = "$($SelectedDisk.Number)"
    $HealthStatus.Content = "$($SelectedDisk.HealthStatus), $($SelectedDisk.OperationalStatus)"
    $PartitionStyle.Content = $SelectedDisk.PartitionStyle

    # Work out if the size should be shown in GB or TB
    If ([math]::Round(($SelectedDisk.Size/1TB),2) -lt 1) {
        $Size.Content = "$([math]::Round(($SelectedDisk.Size/1GB),0)) GB"
    }
    Else {
        $Size.Content = "$([math]::Round(($SelectedDisk.Size/1TB),2)) TB"
    }
}

# Load the XAML form and create the PowerShell variables
LoadForm -XamlPath "$MyScriptDir\ChooseDiskXAML.xaml"

# Create empty array of hard disk numbers
$Global:ArrayOfDiskNumbers = @()

# Populate the ListBox with hard disk models (excluding USB drives) and the array with disk numbers
Get-Disk | Where-Object -FilterScript {$_.BusType -ne 'USB'} | Sort-Object -Property Number | ForEach-Object {
    # Add item to the ListBox
    $ListBox.Items.Add($_.Model) | Out-Null
    # Add the disk number to the array; the explicit Global: scope keeps this the
    # same array the event handlers read, however the script is invoked
    $Global:ArrayOfDiskNumbers += $_.Number
}

# EVENT handlers
$OKButton.Add_Click({
    # If no disk is selected in the ListBox then do nothing
    If ($ListBox.SelectedIndex -lt 0) {
        Return
    }

    # Get the disk whose number matches the ListBox selection
    $Disk = Get-Disk | Where-Object {$_.Number -eq $Global:ArrayOfDiskNumbers[$ListBox.SelectedIndex]}

    # Create the Task Sequence environment object
    $TSEnv = New-Object -ComObject Microsoft.SMS.TSEnvironment

    # Populate the OSDDiskIndex variable with the disk number
    $TSEnv.Value("OSDDiskIndex") = $Disk.Number

    # Close the WPF GUI
    $xamGUI.Close()
})

$ListBox.Add_SelectionChanged({
    # Call function to pull the disk information and populate the details on the form
    Get-SelectedDiskInfo
})

# Launch the window
$xamGUI.ShowDialog() | Out-Null
```
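The GB/TB logic in Get-SelectedDiskInfo can be pulled out into a standalone function, which makes it easy to check outside WinPE. The sketch below (Format-DiskSize is a hypothetical name, not part of the original script) follows the same rules as the script: whole gigabytes below 1 TB, two decimal places from 1 TB upwards.

```powershell
# Hypothetical helper mirroring the size logic in Get-SelectedDiskInfo
function Format-DiskSize {
    param(
        [Parameter(Mandatory = $true)]
        [double]$Bytes
    )
    # Same threshold as the script: round to 2 decimal places in TB first
    $sizeInTB = [math]::Round($Bytes / 1TB, 2)
    if ($sizeInTB -lt 1) {
        # Below 1 TB: show whole gigabytes
        return "$([math]::Round($Bytes / 1GB, 0)) GB"
    }
    return "$sizeInTB TB"
}

Format-DiskSize -Bytes 256GB   # "256 GB"
Format-DiskSize -Bytes 2TB     # "2 TB"
```

PowerShell’s 1GB and 1TB suffixes are binary multiples (1GB is 1,073,741,824 bytes), so a drive sold as 500 GB will show up as roughly 466 GB.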

![image_thumb[10]](https://emeneye.files.wordpress.com/2021/08/image_thumb10_thumb.png?w=836&h=227)
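LoadForm creates a PowerShell variable for every named control by running the XPath query //*[@Name] over the XAML. The sketch below runs the same query on a small inline XAML string, without loading WPF, to show which elements it picks up. The XAML and the Get-NamedXamlElement name are illustrative only. One consequence worth knowing: controls declared with x:Name rather than Name carry the attribute in the XAML namespace, so the query skips them and no variable is created for them.

```powershell
# Hypothetical helper: list the names LoadForm would turn into global variables
function Get-NamedXamlElement {
    param([Parameter(Mandatory = $true)][string]$Xaml)

    [xml]$doc = $Xaml
    # Same XPath as LoadForm: any element carrying an un-prefixed Name attribute
    $doc.SelectNodes('//*[@Name]') | ForEach-Object { $_.GetAttribute('Name') }
}

$sampleXaml = @'
<Window xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml">
    <StackPanel>
        <ListBox Name="ListBox" />
        <Button Name="OKButton" Content="OK" />
        <Label x:Name="NotPickedUp" />
    </StackPanel>
</Window>
'@

# Outputs ListBox and OKButton; the x:Name label is not matched
Get-NamedXamlElement -Xaml $sampleXaml
```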